The concept of backpropagation in neural networks was introduced in the 1960s, and it was later popularized by David Rumelhart, Geoffrey Hinton, and Ronald Williams in their famous 1986 paper. They described how various neural networks learn and showed that multi-layer networks can be trained effectively through backpropagation.

Backpropagation is widely used in neural network training. It calculates the gradient of the loss function with respect to the weights of the network. It works with multi-layer neural networks and learns internal representations of the input-output mapping. This article gives an overview of the backpropagation neural network along with its advantages and disadvantages.

What is an Artificial Neural Network?

A neural network is a collection of connected units, and each connection is associated with a specific weight. This type of network helps in constructing predictive models from large data sets. The system works in a way similar to the human nervous system, and it can help in recognizing and understanding images, learning in a human-like way, synthesizing speech, and many other tasks.

Artificial Neural Network

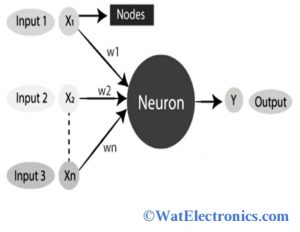

An artificial neural network is a collection of connected input/output units, where each connection has a specific weight. It is often simply called a neural network. This type of network is inspired by biological neural networks, whose neurons are interconnected across various layers. In an artificial network, the neurons are represented as nodes. The artificial neural network can be understood with the help of the accompanying diagram.

Artificial neural networks are used in the field of artificial intelligence, where they imitate the network of neurons in a human brain so that computers can understand things and make decisions in a human-like way. They are built by programming computers to behave like interconnected brain cells.

An artificial neural network can be understood with a digital logic gate example. Consider an OR gate with two inputs and one output. If one or both of the inputs are ON, the output is ON. If both inputs are OFF, the output is OFF. Hence, for a given input, we get an output determined by that input. In a logic gate this input-output relationship is fixed, whereas in our brain the relationship between inputs and outputs changes because the neurons are learning. An artificial neural network imitates this by adjusting its weights.
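To illustrate the idea of learning a mapping instead of hard-wiring it, here is a minimal Python sketch of a single sigmoid neuron that learns the OR truth table from examples using gradient descent. The function names, learning rate, epoch count, and the choice of cross-entropy loss are assumptions made for illustration; this one-layer warm-up is not full backpropagation.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Truth table of the OR gate: (input1, input2) -> desired output
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

def train_or_neuron(epochs=5000, lr=1.0):
    """Fit one sigmoid neuron to the OR gate with full-batch gradient descent."""
    w = [0.0, 0.0]  # one weight per input
    b = 0.0         # bias
    n = len(OR_DATA)
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        for (x1, x2), target in OR_DATA:
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            # For a sigmoid unit with cross-entropy loss, dLoss/dz = p - target.
            err = p - target
            grad_w[0] += err * x1 / n
            grad_w[1] += err * x2 / n
            grad_b += err / n
        # Move each parameter a small step against its gradient.
        w = [w[0] - lr * grad_w[0], w[1] - lr * grad_w[1]]
        b -= lr * grad_b
    return w, b

def predict(w, b, x1, x2):
    """Threshold the neuron's output at 0.5 to get an ON (1) / OFF (0) answer."""
    return 1 if sigmoid(w[0] * x1 + w[1] * x2 + b) >= 0.5 else 0

w, b = train_or_neuron()
print([predict(w, b, x1, x2) for (x1, x2), _ in OR_DATA])  # [0, 1, 1, 1]
```

The neuron starts with all-zero weights and ends up reproducing the OR gate purely from the examples, which is the sense in which a network "learns" its input-output relationship.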

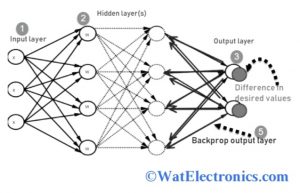

The architecture of the artificial neural network shown above consists of 3 layers. They are the input layer, hidden layer, and output layer.

Input Layer: This layer receives the inputs, in the various formats provided by the programmer.

Hidden Layer: This layer is located between the input layer and the output layer. It performs calculations to discover hidden features and patterns. During training, the error computed at the output is propagated back through this layer to adjust its weights.

Output Layer: The input undergoes a series of transformations in the hidden layer, and the final desired output is obtained in the output layer.
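The flow from input layer to hidden layer to output layer can be sketched as a forward pass in plain Python. The layer sizes (2, 3, 1), the sigmoid activation, and the helper name `dense` are assumptions for illustration, and the weights are random, so the output carries no meaning until the network is trained.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases):
    """One fully connected layer: out_j = sigmoid(sum_i inputs_i * weights[j][i] + biases[j])."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

random.seed(0)
N_IN, N_HIDDEN, N_OUT = 2, 3, 1  # illustrative layer sizes

# Randomly initialised parameters (no training has happened yet).
W_hidden = [[random.uniform(-1, 1) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
b_hidden = [0.0] * N_HIDDEN
W_out = [[random.uniform(-1, 1) for _ in range(N_HIDDEN)] for _ in range(N_OUT)]
b_out = [0.0] * N_OUT

x = [1.0, 0.0]                    # input layer: raw features
h = dense(x, W_hidden, b_hidden)  # hidden layer: intermediate features
y = dense(h, W_out, b_out)        # output layer: final prediction
print(len(h), len(y), y)
```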

What is Backpropagation?

The term backpropagation is short for "backward propagation of errors." It is the heart of neural network training. In this method, the weights of a neural network are fine-tuned based on the error rate obtained in the previous iteration (epoch). Proper tuning of the weights reduces the error rate and makes the model more reliable by improving its generalization.

It is a standard method of training artificial neural networks, which calculates the gradient of the loss function with respect to all the weights in the network. The backpropagation algorithm trains a neural network efficiently by applying the chain rule. That is, after each forward pass through the network, backpropagation performs a backward pass that adjusts the model's parameters.
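To make the chain rule concrete, consider a single sigmoid neuron with input $x$, weight $w$, bias $b$, desired output $y$, and squared-error loss $L$ (notation chosen here for illustration). The gradient of the loss with respect to each parameter is a product of local derivatives:

```latex
\begin{aligned}
z &= w x + b, \qquad a = \sigma(z) = \frac{1}{1 + e^{-z}}, \qquad L = \tfrac{1}{2}(a - y)^2 \\
\frac{\partial L}{\partial w} &= \frac{\partial L}{\partial a}\,\frac{\partial a}{\partial z}\,\frac{\partial z}{\partial w}
  = (a - y)\,\sigma(z)\bigl(1 - \sigma(z)\bigr)\,x \\
\frac{\partial L}{\partial b} &= \frac{\partial L}{\partial a}\,\frac{\partial a}{\partial z}\,\frac{\partial z}{\partial b}
  = (a - y)\,\sigma(z)\bigl(1 - \sigma(z)\bigr)
\end{aligned}
```

The factor $(a - y)$ is the error (actual output minus desired output). In a deeper network, the backward pass extends this same product of local derivatives layer by layer, from the output back toward the input.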

How Backpropagation Works – Simple Algorithm

Backpropagation is described here for a network with four layers: the input layer, hidden layer 1, hidden layer 2, and the final output layer. These belong to three main types of layers. They are

- Input layer

- Hidden layer and

- Output layer

Each layer transforms the data it receives from the previous layer in its own way, and together the layers produce the desired output for the given conditions.

Working of Backpropagation

The working of backpropagation can be explained from the figure shown below.

- The input layer receives the inputs X through the preconnected path

- The inputs are modeled using the weights "W", which are initially selected at random.

- The output of every neuron is calculated, moving from the input layer through the hidden layer to the output layer.

- Evaluate the errors in the outputs.

- To reduce the error, go back from the output layer to the hidden layer and adjust the weights.

- Repeat the process until the desired output is obtained.

The difference between the actual output and the desired output is used to calculate errors obtained in the result.

Error = actual output – desired output.
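The steps above can be put together in a small, dependency-free Python sketch that trains a network with one hidden layer on the XOR problem. The hidden-layer size, learning rate, epoch count, random seed, squared-error loss, and per-example (stochastic) weight updates are all assumptions chosen for illustration; the error is computed as actual output minus desired output, matching the formula above.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# XOR truth table: not linearly separable, so a hidden layer is needed.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

def train(n_hidden=4, epochs=5000, lr=0.5, seed=1):
    """Train a 2-n_hidden-1 sigmoid network with backpropagation; return per-epoch mean loss."""
    rng = random.Random(seed)
    # Weights "W" are selected at random; biases start at zero.
    W1 = [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, target in DATA:
            # Forward pass: input layer -> hidden layer -> output layer.
            h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bj) for row, bj in zip(W1, b1)]
            out = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
            # Error = actual output - desired output; loss = 0.5 * error^2.
            error = out - target
            total += 0.5 * error ** 2
            # Backward pass: chain rule gives dLoss/dz at the output, then at each hidden unit.
            delta_out = error * out * (1.0 - out)
            # delta_h is built from W2 *before* W2 is updated below.
            delta_h = [delta_out * W2[j] * h[j] * (1.0 - h[j]) for j in range(n_hidden)]
            # Gradient-descent updates, going back from the output layer to the hidden layer.
            for j in range(n_hidden):
                W2[j] -= lr * delta_out * h[j]
                b1[j] -= lr * delta_h[j]
                for i in range(2):
                    W1[j][i] -= lr * delta_h[j] * x[i]
            b2 -= lr * delta_out
        losses.append(total / len(DATA))
    return losses

losses = train()
print(f"first-epoch loss {losses[0]:.4f}, last-epoch loss {losses[-1]:.4f}")
```

The loss usually ends up well below its first-epoch value, but the exact numbers depend on the seed, and XOR training can occasionally stall in a poor local minimum.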

Why Do We Need Backpropagation?

Backpropagation helps to adjust the weights of the network connections to minimize the difference between the actual output and the desired output of the network, which is measured by a loss function.
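Mean squared error is one common choice of loss function (others, such as cross-entropy, are also used). A minimal sketch, assuming the outputs are given as equal-length lists of numbers:

```python
def mse(actual, desired):
    """Mean squared error between the network's actual outputs and the desired outputs."""
    if len(actual) != len(desired):
        raise ValueError("actual and desired must have the same length")
    return sum((a - d) ** 2 for a, d in zip(actual, desired)) / len(actual)

# Two outputs, each off by 0.2, give ((0.2)^2 + (0.2)^2) / 2 = 0.04 (up to rounding).
print(mse([0.8, 0.2], [1.0, 0.0]))
```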

- It helps to simplify the network structure by removing weighted links that have a minimal effect on the trained network.

- This method is especially applicable to deep neural networks used for error-prone tasks such as speech and image recognition.

- It handles multiple inputs by applying the chain rule and the power rule of differentiation.

- It is used to calculate the gradient of the loss function with respect to all the weights in the network.

- It minimizes the loss function by updating the weights with a gradient-based optimization method such as gradient descent.

- It modifies the weights of the connected nodes during training to produce "learning".

- This method is iterative, recursive, and efficient: a single backward pass computes the gradients for all the weights.
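The gradient and efficiency points above can be checked with a small sketch. For a single sigmoid neuron (assumed here for simplicity, with squared-error loss and arbitrary parameter values), the chain-rule gradient is compared with a finite-difference estimate. Finite differences need two extra loss evaluations per parameter, whereas backpropagation obtains every gradient from one backward pass, which is where its efficiency comes from in networks with many weights.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared-error loss of one sigmoid neuron: L = 0.5 * (a - y)^2."""
    a = sigmoid(w * x + b)
    return 0.5 * (a - y) ** 2

def backprop_grads(w, b, x, y):
    """Analytic gradients via the chain rule: dL/dw = (a - y) * a * (1 - a) * x."""
    a = sigmoid(w * x + b)
    delta = (a - y) * a * (1.0 - a)  # dL/dz
    return delta * x, delta          # (dL/dw, dL/db)

def numeric_grads(w, b, x, y, eps=1e-6):
    """Central finite differences: two extra loss evaluations per parameter."""
    dw = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)
    db = (loss(w, b + eps, x, y) - loss(w, b - eps, x, y)) / (2 * eps)
    return dw, db

w, b, x, y = 0.5, -0.3, 2.0, 1.0  # arbitrary illustrative values
analytic = backprop_grads(w, b, x, y)
numeric = numeric_grads(w, b, x, y)
print(analytic, numeric)
```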

Types of Backpropagation Neural Network

Backpropagation neural networks are classified into two types:

Static Backpropagation Neural Network

In this type of backpropagation, a static input is mapped to a static output. It is used to solve static classification problems such as optical character recognition.

Recurrent Backpropagation Neural Network

In recurrent backpropagation, the activity is propagated forward until it reaches a certain fixed value or threshold value. Once that value is reached, the error is evaluated and propagated backward.
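One concrete reading of this description, following the recurrent backpropagation scheme associated with Almeida and Pineda, is sketched below for a hypothetical network with a single recurrent tanh unit. The state is iterated forward until it settles at a fixed point, the error is measured there, and an error signal is then relaxed backward to give the gradients. The unit type, the parameter names `w` (recurrent weight) and `u` (input weight), and the tolerances are assumptions; convergence of both phases is guaranteed here only when |w| < 1.

```python
import math

def relax(w, u, x, tol=1e-12, max_iter=10_000):
    """Forward phase: iterate s <- tanh(w*s + u*x) until the state stops changing."""
    s = 0.0
    for _ in range(max_iter):
        s_new = math.tanh(w * s + u * x)
        if abs(s_new - s) < tol:
            return s_new
        s = s_new
    raise RuntimeError("forward relaxation did not converge")

def recurrent_backprop(w, u, x, y, tol=1e-12, max_iter=10_000):
    """Return (dL/dw, dL/du) for L = 0.5 * (s* - y)^2, where s* is the settled state."""
    s = relax(w, u, x, tol, max_iter)
    d = 1.0 - s * s  # derivative of tanh at the fixed point
    err = s - y      # error measured once the state has settled
    # Backward phase: relax the error signal z <- err + w*d*z to its own fixed point.
    z = 0.0
    for _ in range(max_iter):
        z_new = err + w * d * z
        if abs(z_new - z) < tol:
            break
        z = z_new
    else:
        raise RuntimeError("backward relaxation did not converge")
    return z_new * d * s, z_new * d * x

print(recurrent_backprop(0.5, 0.8, 1.0, 0.2))
```

At the fixed point the backward signal equals err / (1 - w*d), so these gradients match what implicit differentiation of s* = tanh(w*s* + u*x) would give.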

The key difference between these two types is that the mapping is static and fast in static backpropagation, while in recurrent backpropagation it is non-static.

Advantages/Disadvantages

The advantages of backpropagation neural networks are given below:

- It is fast, simple, and easy to analyze and program.

- Apart from the number of inputs, it has few parameters to tune (such as the learning rate).

- This method is flexible and requires no prior knowledge about the network.

- It is a standard method that generally works efficiently.

- It doesn't require any special features of the function to be learned.

The disadvantages of backpropagation neural networks are given below:

- The performance of a backpropagation network on a particular problem depends on the input data.

- These networks are very sensitive to noisy data.

- A matrix-based approach is used instead of a mini-batch approach.


Thus, this is all about an overview of the backpropagation neural network, covering artificial neural networks, backpropagation, how backpropagation works with a simple algorithm, the need for backpropagation, and the advantages and disadvantages of backpropagation neural networks. The backpropagation algorithm is mainly used in character mapping, speech recognition, character and face recognition, and artificial neural network training. Here is a question for you: "What is a feedforward neural network?"